Hi All,

I had another conversation with Beck yesterday on the Chat w/ Documents project.

I've shared the video and an AI-powered summary of our conversation below. I also wanted to update everyone on a couple of important points from the call.

- Extracting text along with its structure (for example, the fact that some information sits in a table) is resource-intensive. There are faster, simpler ways to extract text, but they lose that structure, which makes Chat with Documents less accurate.

- With that in mind, we are working on three options:
  a) A full-powered extraction algorithm that runs in the cloud or on a very powerful computer.
  b) A stripped-down extraction process that is less accurate but still runs locally on an average modern computer.
  c) A middle-ground version that balances speed and accuracy and can run on higher-end local AI setups.

Full discussion below. Questions, comments, concerns, and ideas welcome as always. Just hit the reply button.

Thanks

Dave W.

Video Recording:

AI Powered Summary:

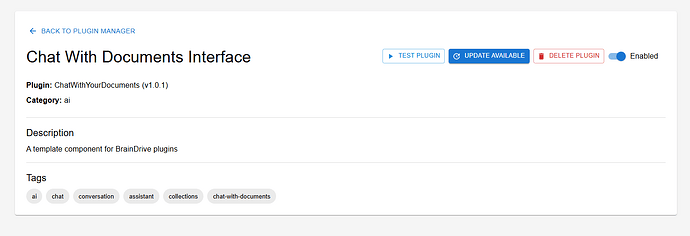

Chat With Your Documents: New Approach, Clear Trade-Offs

We’ve been working hard on improving our Chat with Your Documents feature — and in this update, we’re laying out what’s changed, why it matters, and how we’re evolving our approach going forward.

If you’d rather read than watch the latest dev video, here’s the key summary:

What We Found

Our initial goal was ambitious: extract rich, structured information from documents locally, using open-source tools like spaCy layout. But we ran into three main challenges:

The core issue? There’s a big difference between extracting plain text and extracting structured text (like tables, headers, and layout-aware elements). The structured approach is far more accurate — but also far more demanding on local hardware.
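To make the plain-versus-structured difference concrete, here is a minimal Python sketch. It does not use spaCy Layout or any real extraction library; the table, its values, and the helper names `flatten_plain` and `serialize_rows` are hypothetical. It only shows how flattening a table into plain text drops the link between each value and its column header, while a structure-aware serialization keeps it.

```python
# Hypothetical table, as a structure-aware extractor might hand it over:
# a header row plus data rows. All values are made up for illustration.
header = ["Plan", "Storage", "Price"]
rows = [
    ["Basic", "10 GB", "$5"],
    ["Pro", "100 GB", "$20"],
]


def flatten_plain(header: list[str], rows: list[list[str]]) -> str:
    """Plain-text extraction: every cell becomes a bare token, so the
    association between a value and its column header is lost."""
    cells = header + [cell for row in rows for cell in row]
    return " ".join(cells)


def serialize_rows(header: list[str], rows: list[list[str]]) -> list[str]:
    """Structure-aware serialization: one line per row, with each value
    labeled by its column header."""
    return [
        "; ".join(f"{col}: {val}" for col, val in zip(header, row))
        for row in rows
    ]


if __name__ == "__main__":
    print(flatten_plain(header, rows))
    # Plan Storage Price Basic 10 GB $5 Pro 100 GB $20
    for line in serialize_rows(header, rows):
        print(line)
    # Plan: Basic; Storage: 10 GB; Price: $5
    # Plan: Pro; Storage: 100 GB; Price: $20
```

With only the flattened string, a question like "How much storage does Pro include?" depends on the model guessing which tokens belong together; the labeled rows state the answer directly.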

What We’re Doing Next

We’re moving to a tiered system with clear trade-offs:

- Basic Local Mode
  → Extracts plain text only
  → Fastest, most lightweight, works on any modern laptop
  → Limited accuracy (no structure, no context)

- Intermediate Mode
  → Adds contextual chunking for better RAG (Retrieval-Augmented Generation)
  → Slightly longer processing, still local-friendly

- Advanced Cloud Mode
  → Full structured extraction with headers, tables, and context
  → 4–5x slower locally, but fast and smooth in the cloud
  → Ideal for serious use (e.g., production apps or high-quality knowledge bases)
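The summary doesn't spell out how contextual chunking works. One common form, shown here as a minimal sketch and not necessarily what BrainDrive implements, splits text into chunks and prefixes each chunk with the heading it appeared under, so a retrieved chunk still carries its context. The function name `contextual_chunks`, the `#`-style headings, and the word-count chunk size are all assumptions for illustration.

```python
def contextual_chunks(text: str, max_words: int = 50) -> list[str]:
    """Hypothetical contextual chunker: split text into word-limited chunks
    and prefix each chunk with the most recent heading (a line starting
    with '#'), so the section context travels with the chunk."""
    chunks: list[str] = []
    heading = ""
    buffer: list[str] = []

    def flush() -> None:
        # Emit the buffered words as one chunk, tagged with the current heading.
        if buffer:
            prefix = f"[{heading}] " if heading else ""
            chunks.append(prefix + " ".join(buffer))
            buffer.clear()

    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("#"):
            # A new section starts: close the previous chunk, update context.
            flush()
            heading = stripped.lstrip("#").strip()
            continue
        for word in stripped.split():
            buffer.append(word)
            if len(buffer) >= max_words:
                flush()
    flush()
    return chunks


if __name__ == "__main__":
    doc = (
        "# Refund Policy\nRefunds are issued within 30 days.\n"
        "# Shipping\nOrders ship in 2 business days."
    )
    for chunk in contextual_chunks(doc):
        print(chunk)
    # [Refund Policy] Refunds are issued within 30 days.
    # [Shipping] Orders ship in 2 business days.
```

Without the prefix, a chunk split off mid-section carries no hint of which section it came from; with it, the section name is embedded and retrieved along with the text.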

Why Cloud-First Makes Sense

Running the most advanced version locally is possible — but only on expensive hardware (think $10,000+ workstations). For most owners, the better option is:

- Keep your interface and vector database local (if you want)
- Offload heavy document parsing to a hosted service we’re building
- Maintain ownership, control, and exit rights, even in the cloud

This is still self-hosted AI, not Big Tech AI.

What’s Coming

We’re spinning off the structured document processing into a standalone API service. You’ll be able to:

It’s open-source, transparent, and respects your data sovereignty.

Timeline & Next Call

We expect the first version of the cloud-based document processor to be live within one week. We’re meeting again on Thursday, July 3rd to check in and finalize the integration.

Bigger Picture

This shift reflects a broader insight:

Local AI is great—but cloud-hosted, open-source AI is often the practical default.

Like WordPress, BrainDrive can run locally… but most people host it in the cloud. We’ll continue to support both paths.

Let us know what you think, and whether you’d like to test things out when they go live. And as always: your AI, your rules.

— Dave

co-creator, BrainDrive